Ohbot – Real-Time Face Tracking and AI Response Robot

Ohbot is a robotic face whose servo motors move its key facial components: the eyes, lips, eyelashes, and neck. The robot uses face recognition to detect, track, and follow human faces in real time, adjusting its gaze as the person’s face moves right, left, up, or down. Ohbot is also integrated with OpenAI’s language model, which allows it to answer user questions, and its lip movements are synchronized with the speech output. Together, AI, real-time face tracking, and precise servo movements give Ohbot a highly interactive and natural way of communicating.

Objectives:

- To develop Ohbot’s ability to track human facial movements in real time

  The core functionality of Ohbot is its ability to detect and follow human faces using facial recognition technology. The robot stays engaged with the user by continually adjusting its gaze to match the user’s head movements, maintaining a sense of connection.

- To integrate OpenAI for providing intelligent responses to user questions

  By incorporating OpenAI’s language model, Ohbot can understand and respond to complex user queries. This AI-driven response system allows for natural, meaningful conversations, adding depth to the interaction.

- To synchronize Ohbot’s lip movements with its speech for a realistic interaction

  A key objective is to keep Ohbot’s lip movements closely synchronized with its speech output. This is critical for creating the illusion of a real conversation and enhancing the overall interactive experience.

- To combine advanced face-tracking and AI technologies into a cohesive, interactive robot

  Ohbot brings together facial recognition, AI-based natural language processing, and precise servo control to create an interactive robotic platform that can be used in fields such as customer service, education, and entertainment.

Key Features:

- Face Recognition:

  Ohbot’s real-time face recognition allows it to detect and track human faces so that it stays focused on the user during interactions. The robot follows head movements dynamically, creating a natural sense of engagement.

- Servo Control:

  Servo motors control the movements of Ohbot’s eyes, lips, eyelashes, and neck. These servos allow Ohbot to mimic human expressions and head movements, making the robot appear more lifelike and responsive.

- OpenAI Integration:

  Ohbot is integrated with OpenAI’s language model, enabling it to process natural language inputs and provide contextually appropriate responses. This allows the robot to hold conversations with users and respond to a wide range of queries.

- Lip Syncing:

  Ohbot moves its lips in close synchronization with its speech. This makes the interaction with users feel more like a real conversation.

- Dynamic Gaze Control:

  Ohbot’s eyes move in sync with its face-tracking system. As the user moves, Ohbot adjusts its gaze to maintain eye contact, enhancing the feeling of human-like interaction.

Application Areas:

- Human-Robot Interaction:

  Ohbot improves human-robot interaction by offering a more lifelike experience through facial tracking, dynamic gaze, and synchronized speech. This suits environments where realistic engagement is important, such as social robotics or companionship applications.

- Customer Service:

  With its ability to answer questions using OpenAI, Ohbot can serve as a customer service representative. The robot’s lifelike interaction capabilities make it suitable for environments like retail, hospitality, or even online support, providing users with a more engaging experience.

- Education:

  Ohbot can be used as an educational assistant, interacting with students in real time, answering questions, and explaining complex topics through conversational AI. Its lifelike appearance and interactive features make learning more engaging and accessible.

- Entertainment:

  Ohbot can be programmed for storytelling or gaming applications, where lifelike interactions are essential for immersion. Its dynamic facial expressions and AI-driven responses allow for rich, entertaining experiences.

- Research & Development:

  Ohbot also suits researchers exploring the intersection of AI, robotics, and human-robot interaction. Its integration of several technologies makes it a useful platform for developing new applications in intelligent robotics.

Detailed Working of Ohbot:

1. Face Detection and Tracking:

Ohbot employs a face recognition algorithm to detect and track a user’s face in real time. The system can recognize multiple faces and focus on the most relevant one based on proximity or activity. As the user moves their head, the servos controlling Ohbot’s eyes and neck adjust to keep the robot’s gaze locked on the user’s face.

- Servo-Driven Eye Movement:

  The servos controlling Ohbot’s eyes are programmed to mimic the movement of human eyes so that Ohbot maintains eye contact with the user. The movement is fluid and adjusts according to the user’s position.

- Neck Movement:

  The neck servos allow Ohbot to turn its head left, right, up, and down to follow the user’s head movements. This helps maintain a natural, lifelike interaction by adjusting the robot’s posture dynamically.

- Facial Tracking Accuracy:

  Ohbot uses a combination of computer vision and machine learning techniques to track facial landmarks, which helps it keep following the user’s face in environments with varying lighting or multiple users.
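The tracking behaviour described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Ohbot’s actual control code: it assumes faces arrive as (x, y, w, h) pixel boxes (the layout that OpenCV’s `detectMultiScale` returns), treats the largest box as the closest face, and uses a hypothetical `Gaze` class whose angle range, gain, and sign conventions are invented for the example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

# A face is an (x, y, w, h) bounding box in pixels.
Box = Tuple[int, int, int, int]


def pick_target(faces: Sequence[Box]) -> Optional[Box]:
    """Return the largest face, using box area as a rough proxy for proximity."""
    if not faces:
        return None
    return max(faces, key=lambda box: box[2] * box[3])


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


@dataclass
class Gaze:
    # Hypothetical servo angles in degrees; 90 is assumed to be centred.
    pan: float = 90.0
    tilt: float = 90.0

    def update(self, face: Box, frame_w: int, frame_h: int, gain: float = 20.0) -> None:
        """Nudge pan and tilt toward the face centre with a proportional step."""
        x, y, w, h = face
        # Offset of the face centre from the frame centre, scaled to [-1, 1].
        err_x = ((x + w / 2) - frame_w / 2) / (frame_w / 2)
        err_y = ((y + h / 2) - frame_h / 2) / (frame_h / 2)
        # The signs depend on how the servos are mounted (assumed here).
        self.pan = clamp(self.pan - gain * err_x, 0.0, 180.0)
        self.tilt = clamp(self.tilt - gain * err_y, 0.0, 180.0)


gaze = Gaze()
target = pick_target([(50, 60, 40, 40), (400, 200, 120, 120)])
if target is not None:
    gaze.update(target, frame_w=640, frame_h=480)
print(target, round(gaze.pan, 2), round(gaze.tilt, 2))
```

Calling `update` once per camera frame moves the gaze a fraction of the way toward the face each time, which tends to look smoother than jumping straight to the target angle.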

2. Speech Recognition and Processing:

Ohbot processes spoken inputs from the user using speech recognition algorithms. These inputs are passed to OpenAI’s language model, which processes the query and generates an appropriate response.

- Natural Language Processing:

  Ohbot’s ability to understand natural language allows it to answer a wide range of user questions. The integration with OpenAI helps keep the responses contextually relevant and informative.

- Voice Command Execution:

  Ohbot can also respond to direct voice commands, enabling it to perform tasks such as answering FAQs, providing information, or even controlling other devices in smart environments.

- Real-Time Response:

  Combining real-time speech recognition with OpenAI’s language processing lets Ohbot respond promptly during a conversation, making interactions feel fluid and natural.
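One way to picture the flow from recognized speech to a reply is the sketch below. It is an assumption-laden outline rather than Ohbot’s real code: `COMMANDS`, `handle_utterance`, and `echo_model` are hypothetical names, the command table is invented, and `echo_model` is an offline stand-in for the call to OpenAI’s language model. The history uses the role/content message shape common to chat-style APIs.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

# Hypothetical direct voice commands, checked before the language model is used.
COMMANDS: Dict[str, str] = {
    "what are your opening hours": "We are open from nine to five.",
}


def handle_utterance(
    text: str,
    history: List[Message],
    generate_reply: Callable[[List[Message]], str],
    max_messages: int = 10,
) -> str:
    """Return the reply for one recognized utterance and record the turn."""
    normalised = text.strip().lower().rstrip("?.!")
    if normalised in COMMANDS:
        return COMMANDS[normalised]
    history.append({"role": "user", "content": text})
    reply = generate_reply(history)
    history.append({"role": "assistant", "content": reply})
    # Keep only the most recent messages so each request stays small.
    del history[:-max_messages]
    return reply


def echo_model(messages: List[Message]) -> str:
    """Stand-in for the real language-model call, so the sketch runs offline."""
    return "You asked: " + messages[-1]["content"]


history: List[Message] = []
print(handle_utterance("What are your opening hours?", history, echo_model))
print(handle_utterance("Why is the sky blue?", history, echo_model))
```

Passing the model call in as a function keeps the conversation logic testable without a network connection or an API key.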

3. Lip Syncing:

As Ohbot speaks, its lips move in close synchronization with the audio output. This is achieved by mapping the phonemes of the speech to specific lip movements, creating a realistic representation of talking.

- Phoneme-Based Lip Movement:

  The robot’s lip movements are based on the phonetic components of the speech. As different sounds are produced, the servos controlling the lips adjust to match the shape of a human mouth during speech.

- Synchronized Expression:

  Beyond syncing with the speech, Ohbot adjusts its overall facial expression to match the tone of the conversation. For example, when speaking with enthusiasm, the lips move more dynamically, while slower speech results in subtler movements.
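The phoneme-to-lip mapping can be illustrated with a small lookup table. The sketch below is only an example of the idea: the phoneme labels, the opening values in `MOUTH_OPENING`, and the timings are all invented, and the real mechanism may work differently. It turns a list of timed phonemes into keyframes that a servo loop could play back alongside the audio.

```python
from typing import Dict, List, Tuple

# Hypothetical mouth openings per phoneme: 0.0 = closed, 1.0 = fully open.
MOUTH_OPENING: Dict[str, float] = {
    "AA": 1.0, "AE": 0.8, "IY": 0.4, "UW": 0.3,  # vowels open the mouth
    "M": 0.0, "B": 0.0, "P": 0.0,                # bilabials close the lips
    "F": 0.1, "V": 0.1, "S": 0.2,
    "SIL": 0.0,                                  # silence
}
DEFAULT_OPENING = 0.3  # used for phonemes missing from the table


def lip_schedule(phonemes: List[Tuple[str, float]]) -> List[Tuple[float, float]]:
    """Turn (phoneme, duration_s) pairs into (start_time_s, opening) keyframes."""
    keyframes: List[Tuple[float, float]] = []
    t = 0.0
    for phoneme, duration in phonemes:
        opening = MOUTH_OPENING.get(phoneme, DEFAULT_OPENING)
        keyframes.append((round(t, 3), opening))
        t += duration
    # Close the mouth once the last sound has finished.
    keyframes.append((round(t, 3), 0.0))
    return keyframes


# "mama" as four timed phonemes (timings invented for illustration).
word = [("M", 0.08), ("AA", 0.15), ("M", 0.08), ("AA", 0.2)]
for start, opening in lip_schedule(word):
    print(f"{start:.2f}s -> opening {opening}")
```

Scaling the opening values up or down would be one simple way to make the lips move more dynamically for enthusiastic speech and more subtly for calm speech.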

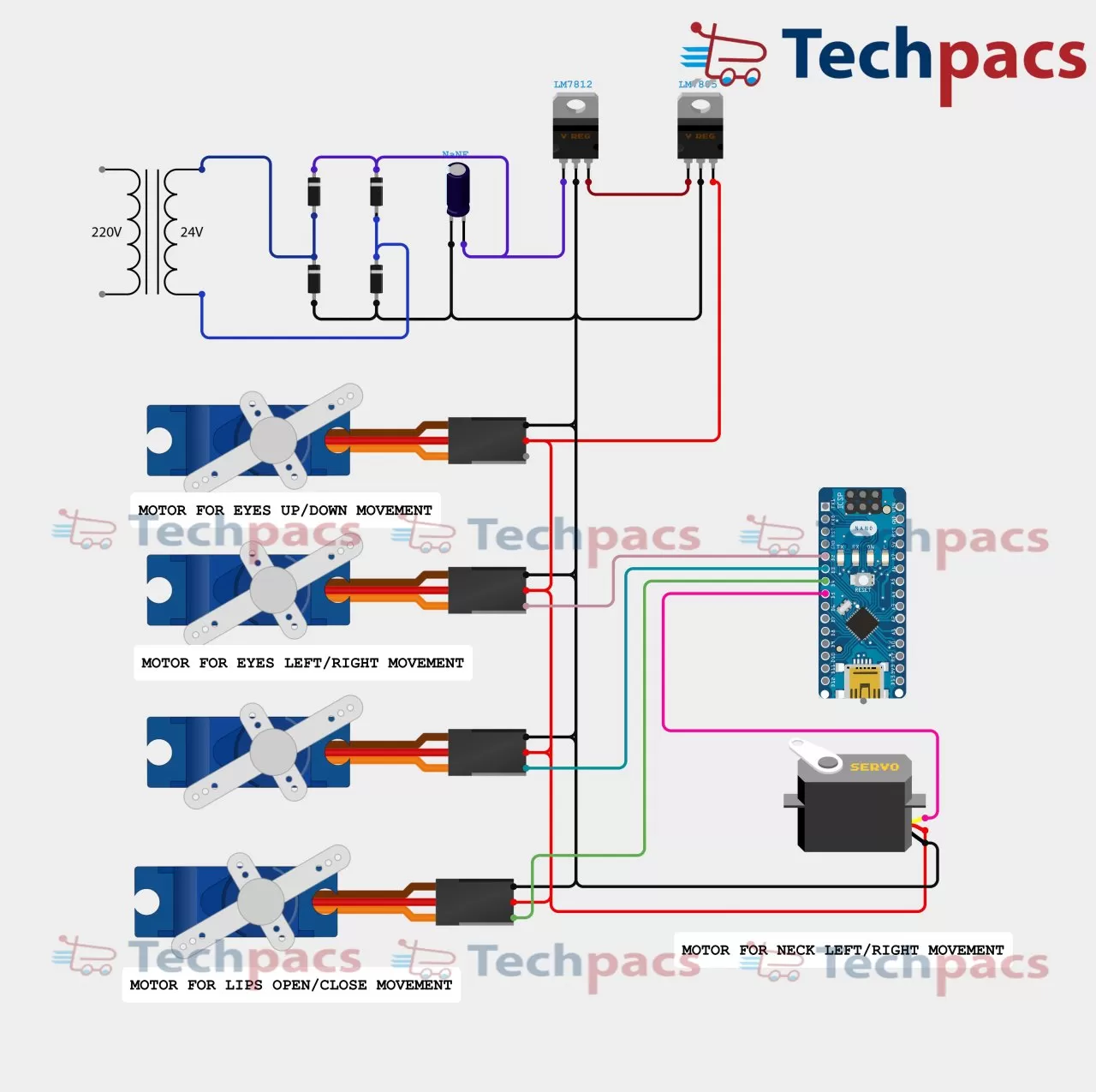

4. Servo Control:

The servo motors that control Ohbot’s facial movements are highly precise, allowing for fine control over the robot’s expressions. These servos are responsible for moving the eyes, lips, neck, and eyelashes in a coordinated manner.

- Eye Movement:

  The servos controlling Ohbot’s eyes adjust their position based on facial tracking data, ensuring that the robot’s gaze follows the user’s movements. The fluidity of these movements is crucial for creating a natural interaction.

- Neck and Head Movements:

  The neck servos provide additional realism by allowing Ohbot to tilt its head or turn it towards the user as they move. This feature enhances the sense of engagement and attention during conversations.

- Eyelash and Lip Control:

  Ohbot can blink its eyes or purse its lips to add subtle expressions to the conversation, further improving the robot’s lifelike appearance.
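Fluid servo motion usually comes from sending many small intermediate positions instead of one large jump. The sketch below shows one common way to do that, assuming a servo that accepts angles from 0 to 180 degrees; `to_angle` and `smooth_path` are hypothetical helpers, and smoothstep easing is a choice made for this example, not something the kit is documented to use.

```python
from typing import List


def to_angle(position: float, min_angle: float = 0.0, max_angle: float = 180.0) -> float:
    """Map a normalised position in [0, 1] to a servo angle in degrees."""
    position = max(0.0, min(1.0, position))
    return min_angle + position * (max_angle - min_angle)


def smooth_path(start: float, end: float, steps: int) -> List[float]:
    """Return `steps` angles easing from start to end (smoothstep easing)."""
    path = []
    for i in range(1, steps + 1):
        t = i / steps
        eased = t * t * (3 - 2 * t)  # slow start, slow finish
        path.append(round(start + (end - start) * eased, 2))
    return path


# Ease an eye servo from its centre position to three quarters of its range.
print(smooth_path(to_angle(0.5), to_angle(0.75), steps=5))
```

Sending each angle in the returned list a few milliseconds apart makes the movement accelerate and decelerate gently, which reads as more natural than a constant-speed sweep.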

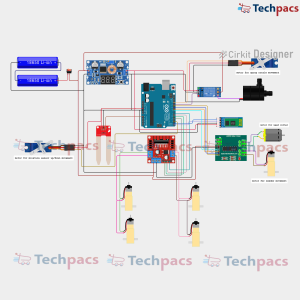

Modules Used to Make Ohbot:

-

Face Recognition Module:

This module uses computer vision algorithms to detect and track human faces in real-time. It allows Ohbot to stay focused on the user, ensuring smooth interactions. -

Servo Motor Control Module:

Controls the precise movements of the servos that drive Ohbot’s facial components, including the eyes, lips, eyelashes, and neck. This module allows for smooth, natural movements. -

Speech Processing (OpenAI Integration):

Handles the conversation aspect of Ohbot’s functionality. This module processes the user’s spoken input and generates responses using OpenAI’s language model. -

Lip Syncing Mechanism:

Ensures that the robot’s lip movements are synchronized with its speech. The mechanism converts the phonetic components of the speech into corresponding lip movements. -

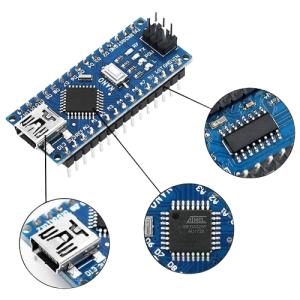

Microcontroller (e.g., ESP32/Arduino):

Controls the servo motors and processes inputs from the facial recognition and speech systems. It acts as the main processing unit that manages Ohbot’s movements and interactions. -

Python Libraries:

Python is used to integrate various components like face tracking, speech recognition, and motor control. Popular libraries such as OpenCV are used for real-time facial detection, while PySerial and other libraries handle servo control.
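As a rough illustration of how Python and the microcontroller might talk to each other, the sketch below encodes a servo move as a short text line and writes it to a serial port with PySerial. The `S<index>:<angle>` line format, the function names, the baud rate, and the port name are all assumptions made for this example; the firmware supplied with the kit may expect something different.

```python
def encode_command(servo: int, angle: float) -> bytes:
    """Encode one servo move as an ASCII line such as S2:135 plus a newline.

    The S<index>:<angle> format is a made-up protocol for this sketch; the
    firmware on the microcontroller would have to parse the same format.
    """
    if servo < 0:
        raise ValueError("servo index must be non-negative")
    clamped = max(0, min(180, round(angle)))
    return f"S{servo}:{clamped}\n".encode("ascii")


def send_command(port: str, servo: int, angle: float, baudrate: int = 115200) -> None:
    """Open the serial port and write one command (requires the pyserial package)."""
    import serial  # imported here so encode_command works without pyserial

    with serial.Serial(port, baudrate, timeout=1) as link:
        link.write(encode_command(servo, angle))


print(encode_command(2, 135.4))
# send_command("/dev/ttyUSB0", 2, 135.4)  # the port name is an assumption
```

Keeping the encoding separate from the port handling means the message format can be checked on a computer with no robot attached.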

Other Possible Projects Using the Ohbot Project Kit:

- AI-Powered Interactive Assistant:

  Expand Ohbot’s capabilities into a full-fledged home or office assistant. By leveraging its facial tracking, conversational AI, and servo-controlled expressions, you can develop Ohbot into an intelligent assistant that can perform tasks such as scheduling appointments, answering questions, controlling smart home devices, and providing personalized information. Its ability to maintain eye contact and communicate in a lifelike manner makes it a highly engaging assistant for any environment.

- Telepresence Robot:

  Utilize Ohbot’s face-tracking and interaction capabilities for telepresence applications. With additional integration of video streaming technologies, Ohbot could act as the "face" for a remote user during meetings or conferences. The remote user’s face could be projected onto Ohbot’s face while the robot’s servos replicate their head and eye movements, creating a more immersive telepresence experience.

- Emotion-Sensing Ohbot:

  Extend Ohbot’s face tracking with emotional recognition capabilities. By incorporating emotion detection algorithms, Ohbot could analyze facial expressions to determine the user’s emotional state and respond accordingly. For example, if a user appears frustrated or sad, Ohbot could offer words of encouragement or helpful suggestions.

- Interactive Storytelling Robot:

  Transform Ohbot into a storytelling robot by integrating it with a database of stories, interactive dialogue scripts, and animation. Ohbot could narrate stories to children or adults while using facial expressions, lip-syncing, and eye movement to enhance the storytelling experience. You could further customize Ohbot to allow users to ask questions or make decisions that influence the direction of the story, creating an interactive narrative experience.

- AI-Driven Customer Support Representative:

  Develop Ohbot into an interactive customer support robot for businesses, able to answer frequently asked questions, guide users through common issues, or provide detailed product information. With its facial tracking, Ohbot can make the interaction more personal by maintaining eye contact, mimicking human gestures, and responding intelligently to customer queries via OpenAI.
