NLP-Based Speech Recognition Prosthetic Hand Using ESP32 and Arduino

Advanced prosthetic solutions are improving daily life for individuals with limb loss or limb differences. The "NLP-Based Speech Recognition Prosthetic Hand Using ESP32 and Arduino" project uses natural language processing (NLP) and speech recognition so that users can control a prosthetic hand with voice commands, offering an intuitive and user-friendly way to operate it. Built around the ESP32 and Arduino, the design aims to be cost-effective and reliable, and to improve the quality of life for individuals who need a prosthetic hand.

Objectives

To design and implement a prosthetic hand that can be controlled via voice commands using NLP techniques.

To enhance the functionality and ease-of-use of prosthetic hands with real-time speech recognition.

To integrate an ESP32 microcontroller for wireless capabilities and efficient processing.

To ensure the system is cost-effective and easily replicable for broader accessibility.

To validate the prosthetic hand's performance through field tests and user feedback.

Key Features

1. Voice Command Control: Operate the prosthetic hand using simple voice commands for ease of use.

2. Real-time Processing: The ESP32 microcontroller ensures quick and efficient response to voice commands.

3. Cost-Effective: Utilizes affordable components like the Arduino and ESP32, making it accessible.

4. Wireless Capabilities: ESP32 provides Bluetooth and Wi-Fi connectivity for versatile applications.

5. User-Friendly Interface: Simple, intuitive interface for users to issue voice commands effortlessly.

Application Areas

The NLP-Based Speech Recognition Prosthetic Hand has application areas across healthcare and rehabilitation. It serves as an assistive technology for individuals who have lost a hand through accident or illness, or who were born with a limb difference. The prosthetic hand can be used in daily activities, helping users perform tasks that require fine motor skills, such as picking up objects, typing, and personal care tasks, which increases their independence and quality of life. Because it is operated by voice commands, it is also suitable for elderly users or those with additional physical limitations.

Detailed Working of NLP-Based Speech Recognition Prosthetic Hand Using ESP32 and Arduino:

Imagine a world where advanced technology bridges the gap between human potential and physical limitations. The NLP-Based Speech Recognition Prosthetic Hand is one such innovation that harnesses the power of cutting-edge electronics. This project integrates Natural Language Processing (NLP), speech recognition, and microcontrollers to create a prosthetic hand that responds to voice commands, enhancing the quality of life for individuals with disabilities.

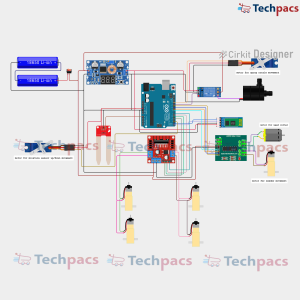

At the heart of this innovative project, the ESP32 microcontroller serves as the central processing unit. The ESP32, known for its robust processing capabilities and built-in Wi-Fi and Bluetooth connectivity, is connected to an Arduino board, creating a seamless interface between the speech recognition module and the servo motors of the prosthetic hand. This dual-board setup ensures that voice commands are accurately interpreted and relayed to the hand's mechanical components.

The power supply unit keeps the circuit operating smoothly. A step-down transformer converts the 220V AC mains supply to a safer 24V AC. This 24V AC is then rectified by a bridge rectifier, smoothed by capacitors, and regulated by voltage regulators (LM7812 and LM7805) to provide steady 12V and 5V DC outputs. The 5V DC output powers the ESP32, the Arduino board, and the servo motors that actuate the prosthetic hand.
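
The voltages in this chain can be checked with a little arithmetic. The sketch below is only an estimate: the 0.7V diode drop, the 0.5A load current, and the 35V input limit (the figure usually quoted in LM78xx datasheets) are assumptions, not values taken from this project.

```python
import math

V_RMS = 24.0             # transformer secondary voltage, from the text
DIODE_DROP = 0.7         # assumed forward drop per silicon diode, in volts
LM78XX_MAX_INPUT = 35.0  # assumed absolute maximum input, check the datasheet
LOAD_CURRENT = 0.5       # assumed load current in amperes

def rectified_peak(v_rms, diode_drop=DIODE_DROP):
    """Peak DC after a bridge rectifier: two diodes conduct on each half cycle."""
    return v_rms * math.sqrt(2) - 2 * diode_drop

def linear_regulator_loss(v_in, v_out, load_current):
    """Heat dissipated by a linear regulator, in watts."""
    return (v_in - v_out) * load_current

peak = rectified_peak(V_RMS)
print(f"Peak DC after the bridge: {peak:.1f} V")
print(f"Margin to the assumed input limit: {LM78XX_MAX_INPUT - peak:.1f} V")
print(f"LM7812 loss: {linear_regulator_loss(peak, 12.0, LOAD_CURRENT):.1f} W")
print(f"LM7805 loss: {linear_regulator_loss(12.0, 5.0, LOAD_CURRENT):.1f} W")
```

Under these assumptions the rectified peak is roughly 32.5V, which leaves little headroom below the assumed regulator limit and implies noticeable heat in the LM7812, so heat sinking and the actual datasheet limits are worth checking before building.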

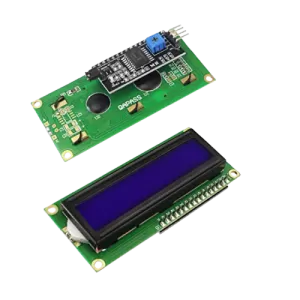

In this setup, the LCD screen connected to the ESP32 provides a user-friendly interface. This display shows real-time feedback, including system status and recognized voice commands, ensuring the user is always informed about the prosthetic hand's operation. The LCD receives power and data signals directly from the ESP32, with the necessary connections established through appropriate GPIO pins.

The speech recognition module plays a pivotal role in this project. It captures voice commands from the user, processes them into textual data, and sends them to the ESP32 for further NLP processing. The ESP32, equipped with NLP algorithms, understands the context of the commands and translates them into specific actions. For instance, commands such as "open hand" or "close hand" are processed and matched to corresponding motor actions.
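
The matching step can be illustrated with plain phrase matching on the recognized text. This is a minimal host-side sketch, not the project's firmware and not a full NLP model: the phrases come from the text, while the action names `OPEN` and `CLOSE` and the function names are hypothetical.

```python
import re

# Phrases are from the text; the action names are assumed for illustration.
COMMANDS = {
    "open hand": "OPEN",
    "close hand": "CLOSE",
}

def normalize(text):
    """Lowercase the text, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^a-z\s]", " ", text.lower())
    return " ".join(text.split())

def match_command(transcript):
    """Return the action for the first known phrase found, else None."""
    cleaned = f" {normalize(transcript)} "
    for phrase, action in COMMANDS.items():
        # Padding with spaces matches whole words only.
        if f" {phrase} " in cleaned:
            return action
    return None

print(match_command("Please OPEN hand!"))
print(match_command("wave"))
```

Returning `None` for unrecognized input lets the caller ignore the utterance instead of moving the hand on a misheard command.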

The servo motors, which actuate the prosthetic fingers and joints, are critical components. The ESP32 sends precise PWM signals to each servo motor based on the interpreted voice commands. These signals determine the angle and movement of each motor, enabling the prosthetic hand to perform complex tasks such as gripping objects or gesturing. The servos are powered by the 5V DC supply, ensuring reliable and consistent performance.

To achieve this, each servo motor is meticulously wired to the ESP32. The signal wires are connected to distinct GPIO pins, while the power and ground wires are connected to the regulated power supply. This setup allows for fine control over each motor's movement, ensuring synchronized and natural hand motions. The precision of the PWM signals from the ESP32 ensures that each servo responds accurately to the intended command.
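
The relationship between a target angle and the PWM signal can be sketched as follows. The numbers are assumptions typical of hobby servos (50 Hz frames, 1000 to 2000 microsecond pulses over 0 to 180 degrees) and a 16-bit PWM timer; the servos in this kit may need different limits.

```python
PWM_FREQ_HZ = 50       # assumed servo frame rate
MIN_PULSE_US = 1000.0  # assumed pulse width at 0 degrees
MAX_PULSE_US = 2000.0  # assumed pulse width at 180 degrees
RESOLUTION_BITS = 16   # assumed PWM timer resolution

def angle_to_pulse_us(angle_deg):
    """Map an angle, clamped to 0..180 degrees, to a pulse width in microseconds."""
    angle = max(0.0, min(180.0, angle_deg))
    return MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / 180.0

def pulse_to_duty(pulse_us, freq_hz=PWM_FREQ_HZ, bits=RESOLUTION_BITS):
    """Convert a pulse width to the duty value for a timer of the given resolution."""
    period_us = 1_000_000 / freq_hz
    return round(pulse_us / period_us * (2 ** bits - 1))

for angle in (0, 90, 180):
    pulse = angle_to_pulse_us(angle)
    print(angle, pulse, pulse_to_duty(pulse))
```

Clamping the angle before conversion keeps an out-of-range request from driving a servo against its mechanical stop.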

Safety is paramount in this design. The LM7812 and LM7805 voltage regulators are responsible for maintaining stable power outputs, preventing voltage fluctuations that could damage sensitive components like the ESP32 and Arduino board. Additionally, capacitors filter any residual AC ripples, ensuring a clean DC supply. This stable power ensures the longevity and reliability of the entire system, from the sophisticated electronics to the mechanical movements.

In summary, the NLP-Based Speech Recognition Prosthetic Hand is a marvel of modern engineering. The ESP32 and Arduino work in tandem to interpret voice commands and actuate the servo motors, creating a seamless experience for the user. From the initial power conversion to the precise motor control, every component plays a vital role in bringing this innovative prosthetic hand to life, showcasing the incredible potential of combining NLP and robotics in assistive technologies.

Modules used to make NLP-Based Speech Recognition Prosthetic Hand Using ESP32 and Arduino:

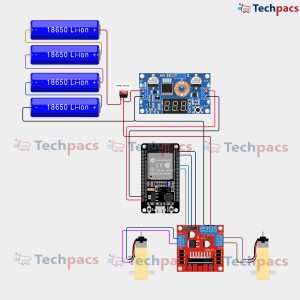

1. Power Supply Module

The power supply module is responsible for providing the necessary electrical power to all components of the project. In this circuit, a step-down transformer initially converts the high 220V AC voltage to a much safer 24V AC. This is then rectified and filtered to generate a stable DC voltage, which powers the ESP32 microcontroller and other connected components. The power supply must maintain consistent voltage levels to ensure reliable operation and prevent damage to the sensitive electronics. Proper regulation and filtering keep the supply to the microcontroller and servo motors free from electrical noise and voltage fluctuations.

2. ESP32 Microcontroller Module

The ESP32 microcontroller module serves as the brain of this speech recognition-based prosthetic hand. It is responsible for processing voice commands, converting them into actions, and controlling the servo motors to move the prosthetic hand accordingly. The ESP32 is programmed to recognize specific speech commands using a trained NLP (Natural Language Processing) model. Once it identifies a valid command, it sends appropriate control signals to the servo motors to initiate movement. The Wi-Fi and Bluetooth capabilities of the ESP32 also allow for future enhancements like remote control or updates over-the-air.

3. Speech Recognition Module

The speech recognition module is an integral part of this project, responsible for capturing and interpreting vocal commands. Although the diagram does not explicitly show dedicated speech recognition hardware, this functionality is likely software-based, running on the ESP32 microcontroller. The ESP32 captures audio via an attached microphone, processes it through an NLP model, and then converts the spoken words into actionable commands. This software module is critical for turning human speech into digital commands that control the prosthetic hand.

4. Servo Motors Module

The servo motors are the actuating components responsible for the physical movements of the prosthetic hand. In the diagram, multiple servo motors are connected to the ESP32 microcontroller. Each motor is associated with a specific finger or joint in the prosthetic hand. When the ESP32 sends a control signal to a servo motor, it rotates to a specific angle, resulting in the desired movement, such as closing or opening a finger. The precise control of these motors is crucial for the accurate and fluid movement of the prosthetic hand, mimicking natural hand motions as closely as possible.
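
One way to get fluid motion is to move every servo toward its target in small steps rather than jumping straight to the final angle. The sketch below assumes four servos, as listed in the components section; the pose angles and the step size are hypothetical.

```python
# Hypothetical poses: one angle per servo, for the four servos in the kit.
POSES = {
    "OPEN": (0, 0, 0, 0),
    "CLOSE": (180, 180, 180, 180),
}

def step_towards(current, target, max_step=5):
    """Move each servo angle at most max_step degrees toward its target."""
    result = []
    for cur, tgt in zip(current, target):
        delta = max(-max_step, min(max_step, tgt - cur))
        result.append(cur + delta)
    return tuple(result)

def plan_motion(current, target, max_step=5):
    """List the intermediate poses from current to target, ending at target."""
    frames = []
    while current != target:
        current = step_towards(current, target, max_step)
        frames.append(current)
    return frames

frames = plan_motion(POSES["OPEN"], POSES["CLOSE"], max_step=45)
print(len(frames), frames[-1])
```

On real hardware each frame would be written to the servos with a short delay between frames, and the step size then sets how fast the hand closes.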

5. User Interface Module

The user interface module, represented by the LCD display in the circuit diagram, provides real-time feedback to the user. This LCD screen displays information such as the recognized speech command, operational status, and any errors or alerts. It serves as a critical communication bridge between the system and the user, ensuring that the user is constantly informed about the system’s state. This feedback loop is essential for debugging purposes and for providing intuitive control to the user, enhancing the overall user experience and functionality of the prosthetic hand.
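
Because the display is a 16x2 LCD, each message has to fit two rows of 16 characters. The sketch below shows one possible layout, with the status on the top row and the last recognized command below it. The layout and the labels are assumptions, not the project's actual screen design.

```python
LCD_COLS = 16  # 16x2 display, per the component list
LCD_ROWS = 2

def fit(text, width=LCD_COLS):
    """Truncate or pad text so that it fills exactly one LCD row."""
    return text[:width].ljust(width)

def render(status, command):
    """Return the two rows to write: status on top, last command below."""
    return [fit(f"Status: {status}"), fit(f"Cmd: {command}")]

for row in render("READY", "open hand"):
    print(f"|{row}|")
```

Padding each row to the full width overwrites leftover characters from a longer previous message without clearing the whole screen.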

Components Used in NLP-Based Speech Recognition Prosthetic Hand Using ESP32 and Arduino:

Power Supply Module

220V to 24V Transformer: Converts the main AC voltage (220V) to a lower AC voltage (24V) suitable for the circuit.

LM7812 Voltage Regulator: Regulates the rectified and filtered DC derived from the 24V AC supply down to a steady 12V DC required for certain components.

LM7805 Voltage Regulator: Regulates the 12V to a steady 5V DC necessary for several modules including the ESP32.

Capacitors: Used for smoothing the rectified DC and stabilizing the outputs of the voltage regulators.

Control Module

ESP32: The main controller used for processing speech recognition algorithms and controlling the servo motors.

Display Module

16x2 LCD Display: Displays the status and information related to the speech recognition and hand movements.

Actuator Module

Servo Motors: Four servo motors are used to actuate different joints of the prosthetic hand.

Other Possible Projects Using this Project Kit:

1. Voice-Controlled Home Automation System

Using the same NLP-based ESP32 and Arduino setup, you can create a comprehensive home automation system that responds to voice commands. Integrate various home appliances like lights, fans, and air conditioners by connecting relays to the ESP32. By employing speech recognition, you can conveniently control these devices. For example, you could turn on the lights or adjust the room temperature through simple verbal instructions. This project combines ease of use and improved home efficiency, making everyday living more comfortable and futuristic. Moreover, advancements in NLP can offer more personalized and accurate responses.

2. Smart Wheelchair with Voice Commands

This project transforms a regular wheelchair into a smart, voice-controlled mobility aid. Using the ESP32 and Arduino along with motors and a voice recognition module, you can enable the wheelchair to respond to commands like "move forward," "turn left," or "stop." This integration not only simplifies mobility for individuals with disabilities but also significantly enhances their independence and quality of life. The setup can also include features like obstacle detection using sensors to prevent collisions, making the wheelchair not only smart but also safe.
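
The command handling and the obstacle check described above can be combined in a few lines. In this sketch the command phrases come from the text, while the speed values, the 30 cm safety distance, and the assumption of a single front-facing distance sensor are hypothetical.

```python
# Hypothetical (left, right) motor speeds in the range -1.0..1.0.
DRIVE = {
    "move forward": (1.0, 1.0),
    "turn left": (-0.5, 0.5),
    "stop": (0.0, 0.0),
}
SAFE_DISTANCE_CM = 30  # assumed minimum clearance in front of the chair

def motor_speeds(command, obstacle_cm):
    """Return motor speeds for a command, stopping forward motion near an obstacle."""
    left, right = DRIVE.get(command, (0.0, 0.0))  # unknown commands stop the chair
    moving_forward = left > 0 and right > 0
    if moving_forward and obstacle_cm < SAFE_DISTANCE_CM:
        return (0.0, 0.0)
    return (left, right)

print(motor_speeds("move forward", 100))
print(motor_speeds("move forward", 10))
```

Treating any unrecognized command as "stop" is a deliberately conservative default for a mobility aid.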

3. Interactive Voice-Controlled Robot

By leveraging the same components, you can build an interactive robot that understands and follows voice commands. This robot can be programmed to perform various tasks like picking up objects, navigating an area, or even performing simple chores. Using the servo motors and the ESP32, the robot can have articulating arms and a head, making it more interactive and responsive. This kind of project is perfect for educational purposes, demonstrating robotics, IoT, and artificial intelligence concepts in a hands-on manner.

4. Automated Voice-Controlled Gardening System

This innovative project involves creating a voice-activated gardening system. Using the ESP32, solenoid valves, and soil moisture sensors, you can automate the watering process. By using voice commands, you can instruct the system to water the plants, check soil moisture levels, and even control the greenhouse environment. This project not only ensures your plants are well-watered and healthy but also saves water by being precise about the watering schedule. It is an excellent tool for both hobbyist gardeners and those seeking to implement smart agricultural practices.

5. Voice-Controlled Personal Assistant

You can develop a voice-controlled personal assistant that can perform various tasks like setting reminders, making calls, sending messages, or even controlling other smart devices. Using the ESP32 and Arduino together with NLP allows for a responsive and interactive assistant. This project can be further expanded with cloud services for more advanced functionalities such as fetching weather updates, news, or playing music. This assistant can be an essential part of a smart home, integrating various smart applications for an enhanced user experience.
